Ableton is making history.

In the world of sound recording tools, the German music software company’s name, as well as the name of their flagship product, “Live,” may not be as ubiquitous as names like “Fender Stratocaster,” “Marshall amplifier,” or even “Pro Tools.” But in the world of computer-based music production, Ableton is a giant. Their fresh take on composition has earned their software a place in the arsenals of acts you perhaps wouldn’t expect, everyone from Flying Lotus and Daft Punk to Caribou and M83, Pete Townshend and Mogwai to DJ Rashad and D/P/I.

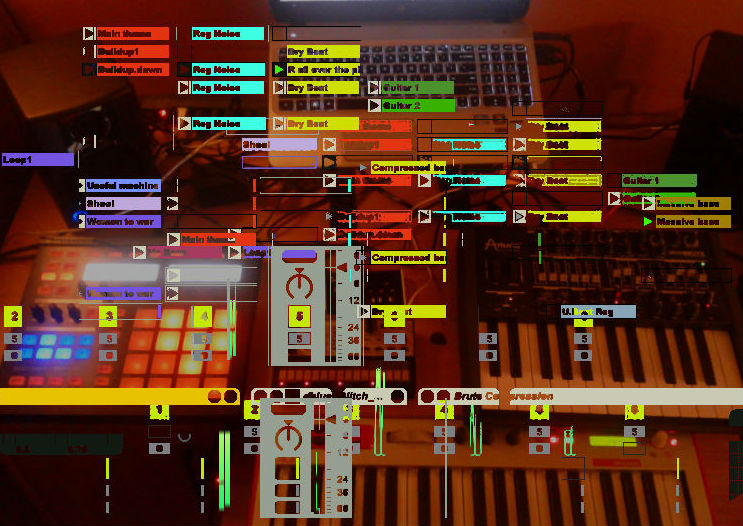

This far-reaching influence can be credited largely to Ableton Live’s groundbreaking interface. An extremely dense and powerful sampler at heart, Live subverts the normal linear-time-based recording process inherent to most Digital Audio Workstations (DAWs) with its Session View. This screen is a matrix of clip slots that allows users to place audio and MIDI loops in various patterns, then quickly and intuitively swap them in and out to create a live, organic performance. If a user prefers, they can lay material out on a traditional timeline recording view, but it’s the Session View that holds the true heart of Ableton Live and the creative spark that sets it apart from a sea of competing software. This creative setup allows artists to employ the software outside the well-trod corridors of electronic dance music. Perhaps more than with any other comparable program, Live users constantly discover ways to use its functionality as a creative tool in tandem with their outboard instruments, hardware, and bandmates.

The latest version, Ableton Live 9, released earlier this year, adds a feature that has the potential to revolutionize the way electronic musicians create: audio-to-MIDI conversion. Simply put, “MIDI” (Musical Instrument Digital Interface) is a system by which a piece of music’s notes are expressed mathematically. Take your favorite melody by Bach or Mozart: you can express the whole thing in terms of MIDI parameters like pitch, sequence, tempo, modulation, and velocity. Unlike normal musical notation, this MIDI information is mathematically specific and able to be interpreted by another MIDI instrument or software.
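To make “expressed mathematically” concrete, here is a minimal Python sketch of a melody reduced to numbers. It assumes the common convention that middle C (C4) is MIDI note 60, uses MIDI’s 0 to 127 velocity scale, and measures timing in beats; the `Note` class and helper functions are illustrative names, not part of any MIDI library. The phrase is the opening of the French tune Mozart used for his variations on “Ah vous dirai-je, Maman” (the melody English speakers know as “Twinkle, Twinkle, Little Star”).

```python
from dataclasses import dataclass

# Semitone offsets of the natural notes within an octave (C = 0).
NOTE_OFFSETS = {"C": 0, "D": 2, "E": 4, "F": 5, "G": 7, "A": 9, "B": 11}


def note_number(name: str, octave: int) -> int:
    """Map a natural note name and octave to a MIDI note number.

    Assumes the common convention that C4 (middle C) is 60 and A4 is 69.
    """
    return 12 * (octave + 1) + NOTE_OFFSETS[name]


@dataclass
class Note:
    pitch: int       # MIDI note number, 0-127
    velocity: int    # how hard the note is struck, 1-127
    start: float     # onset, in beats
    length: float    # duration, in beats


def beats_to_seconds(beats: float, bpm: float) -> float:
    """Convert a position in beats to seconds at a fixed tempo."""
    return beats * 60.0 / bpm


# Opening phrase of the "Ah vous dirai-je, Maman" theme, in C major.
names = [("C", 4), ("C", 4), ("G", 4), ("G", 4), ("A", 4), ("A", 4), ("G", 4)]
lengths = [1, 1, 1, 1, 1, 1, 2]

melody = []
position = 0.0
for (name, octave), length in zip(names, lengths):
    melody.append(Note(note_number(name, octave), 90, position, float(length)))
    position += length

# Because every note is just a number, transposing up a whole step is arithmetic.
transposed = [Note(n.pitch + 2, n.velocity, n.start, n.length) for n in melody]
```

Under these assumptions, `[n.pitch for n in melody]` is `[60, 60, 67, 67, 69, 69, 67]`, and the transposed copy is the same list shifted up by two. Nothing about the sound itself is stored, which is why any MIDI instrument can play the phrase back with its own timbre.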


Traditionally, the medium of MIDI in a computer environment worked one way: MIDI input to audio signal. A keyboard transmitting MIDI information could trigger a software-based synthesizer. MIDI sequences programmed by a musician could command computerized orchestras or drive external equipment. But with Live 9’s audio-to-MIDI feature, a user has the ability to extract this valuable note information from existing audio content and use it however they wish. Other software programs have attempted this feature in the past with varying results and, oftentimes, serious asking prices and/or a protracted workflow. Ableton is the first to incorporate the extraction process into such a creatively intuitive setup, and it has fine-tuned the note-recognition technology until it comes closer to perfect than any software we’ve seen yet.
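Ableton has not published how its converter works, so the sketch below does not reproduce it. It shows only one well-understood step that any pitch-based audio-to-MIDI tool needs: mapping a detected fundamental frequency to the nearest equal-tempered MIDI note (assuming A4 = 440 Hz = note 69), then merging consecutive analysis frames that land on the same note. The frame values are hypothetical, and the hard part, detecting those frequencies in real audio, is left out.

```python
import math


def freq_to_midi(freq_hz: float, a4_hz: float = 440.0) -> int:
    """Nearest equal-tempered MIDI note for a frequency (A4 = note 69)."""
    if freq_hz <= 0:
        raise ValueError("frequency must be positive")
    return round(69 + 12 * math.log2(freq_hz / a4_hz))


def frames_to_notes(freqs: list[float]) -> list[list[int]]:
    """Merge consecutive analysis frames that round to the same note.

    Returns [midi_note, start_frame, frame_count] entries. A real converter
    also has to handle silence, note onsets, vibrato, and octave errors;
    this sketch ignores all of that.
    """
    notes: list[list[int]] = []
    for i, f in enumerate(freqs):
        n = freq_to_midi(f)
        if notes and notes[-1][0] == n:
            notes[-1][2] += 1          # same pitch: extend the current note
        else:
            notes.append([n, i, 1])    # new pitch: start a new note
    return notes
```

For example, `frames_to_notes([440.0, 441.0, 439.5, 493.9, 494.2])` returns `[[69, 0, 3], [71, 3, 2]]`: three frames of A4 followed by two frames of B4, with the small pitch wobble rounded away.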

All this revolution got me thinking about how important and useful MIDI technology really is, what a miracle it is that the music industry accepted it as a standard at all, and how striking it is that a 30-year-old communications protocol is still driving the ways bleeding-edge technological revolutions take shape. What is it about MIDI that’s so compelling to hardware and software developers?

More than anything, I began to wonder about the potential uses (and possible abuses) of Ableton Live’s audio-to-MIDI conversion feature. If anyone can extract note data from any outside source and reuse it to their own desire, will anyone have to actually play anymore? Are we witnessing the beginning of the end of real-world musicianship? For that matter, are we witnessing the end of musical originality?

“Are you ever going to use any of this stuff?”

I’ll admit it, I’m a bit of a pack rat. I’ll hang on to old shoe boxes, broken tools, threadbare clothes… it just always seems a shame to throw away something that could potentially be of further use some day. My wife is the opposite: a tidy German woman who loves to have clean, orderly surroundings. Although my penchant for messiness annoys her, she leaves my haphazardly stacked electronic gear and tangles of wires alone, as long as I relegate them to our basement, which we’ve unofficially designated my “studio.” I know it pains her just to know there’s such a ridiculous mess somewhere in the house, but she knows my space is important to me, and I appreciate her understanding. This arrangement has probably helped our marriage quite a bit: I can make as big a mess as I want so long as I keep it out of her view.

From time to time, though, my pack-rat tendencies will get too intense and she’ll set off on a junk-purging binge. It’s usually triggered by something simple: opening up a cabinet door to have clumps of wires fall down on her, looking underneath a shelf to find a graveyard of dust-collecting guitar effects pedals… I believe my mistake this day was hiding the AA batteries she was looking for in a drawer buried beneath a mound of obsolete cables and connectors. There were old PS/2 connector computer mice, serial port monitor cables, 3.5mm data transfer wires for old Texas Instruments calculators, power adapters for lost and broken equipment, etc.

So when she stepped back from the pile with her hands on her hips, glaring at me, I felt a little sheepish and conciliatory.

“Really, if you’re never going to use this stuff ever again, you need to just throw it away!”

It’s true. There are a lot of cables and connectors I no longer need. I’ve been interested in electronic technology since I was young, especially music and computer technology, so I have amassed spiderwebs of wires and connectors, many currently sitting in varying states of uselessness.

From the very start of any hardware design, it seems one of the first priorities is figuring out how to make the device in question communicate with other devices. This is especially true with pieces of musical gear, which, to be useful in composition and in performance, must be able to exchange signals with other pieces of musical gear and respond to them immediately. I’ve owned samplers, sequencers, synthesizers, drum machines, effects processors, and other units that have used everything from RCA to S/PDIF, 3.5 mm sync, SCSI, CV, 1/4” jacks, and XLR. Some of these are still in use in various parts of my studio, but most are collecting dust in the depths of my drawers, shelves, and filing cabinets. It seems a lot of these connection formats just fall out of favor for one reason or another and never show up again on new models.

These days, most computer gear is standardized to work off some version of USB, which can involve various interface sizes and versions depending on the technology and scale involved. However, in the realm of music gear, one format has existed for much longer and has established perhaps an even more consistent authority on the issue of unit-to-unit connection: MIDI. Almost every piece of electronic music gear I own has a MIDI port, and the technology hasn’t changed much in the last 30 years. This system of communication is so pervasive and reliable that it’s been widely accepted since before I was born, and no MIDI cables have ever made their way to my wire graveyards.

With the proliferation of electronic synthesizers in the 1960s and 70s, musicians suddenly had the ability to completely control the pitch, timbre, sequence, and tempo of any electronically synthesized sound. In fact, pieces traditionally requiring a drummer, a bassist, one or more guitarists, and any number of additional keyboardists could all be efficiently replicated through the use of careful programming and sequencing of synthesizers and drum machines. The real trick became figuring out how to get these units to speak with each other.

An electronic musician may have one synthesizer serving as a treble melodic lead, another as a foundational bass, and a third as a synthesized drum rack. In the early days, if this musician wanted all three to play together for a complete composition, it was easier said than done. Without three musicians to play each of these pieces of equipment collaboratively, it was a struggle to get them all to play the correct notes at the correct speed and consistently stay in time. One could set the BPM (Beats Per Minute) to the exact same figure on all three pieces of equipment and attempt to trigger them in unison, but minor imperfections in timing and sequencing would still result in the melodies drifting out of sync and key.
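The drift problem comes down to simple arithmetic. In the hypothetical case below, two machines are both set to 120 BPM, but one internal clock runs 0.1% fast; the function names are illustrative.

```python
def drift_beats(bpm_a: float, bpm_b: float, seconds: float) -> float:
    """How many beats apart two free-running sequencers end up after `seconds`."""
    return abs(bpm_a - bpm_b) * seconds / 60.0


def beats_to_ms(beats: float, bpm: float) -> float:
    """Express a beat offset in milliseconds at the given tempo."""
    return beats * 60_000.0 / bpm


# Hypothetical case: both machines are set to 120 BPM, but one internal
# clock runs 0.1% fast, so it actually plays at 120.12 BPM.
offset = drift_beats(120.0, 120.12, seconds=5 * 60)
print(f"{offset:.2f} beats, about {beats_to_ms(offset, 120.0):.0f} ms apart")
```

Under those assumptions the sketch prints `0.60 beats, about 300 ms apart` after five minutes, more than enough for the parts to sound audibly out of time.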

One of the first solutions to this problem was the Control Voltage/Gate system (referred to simply as “CV”). Through a system of electronic pulses and voltage changes, cords connected between the various instruments would dictate note pitch and timing information. This worked on vintage synthesizers to keep them relatively in time and in key, but there were limitations. First, the CV system was sometimes complicated to implement and relied on precisely tuned equipment whose specifications were often not standardized from manufacturer to manufacturer. Musicians who owned a synthesizer made by Korg and a drum machine made by Roland may not have been able to sync them up at all because of the different proprietary designs of the units. Secondly, even when the system worked perfectly, the electrical impulses could only control the pitch of a note and the points at which it started and stopped — nothing else. The abilities and possibilities of the format were very limited.
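One commonly cited example of this incompatibility is pitch scaling: many Moog, ARP, and Roland synthesizers used 1 volt per octave, while many Korg and Yamaha models used Hz/V, where the control voltage is proportional to frequency. The sketch below compares the two schemes; the reference note and reference voltages are hypothetical values chosen only to keep the numbers easy to read.

```python
def volts_per_octave(note: int, ref_note: int = 36, ref_volts: float = 0.0) -> float:
    """1 V/octave scaling: each semitone adds 1/12 V (linear in pitch)."""
    return ref_volts + (note - ref_note) / 12.0


def hertz_per_volt(note: int, ref_note: int = 36, ref_volts: float = 1.0) -> float:
    """Hz/V scaling: voltage is proportional to frequency, so it doubles per octave."""
    return ref_volts * 2.0 ** ((note - ref_note) / 12.0)


# Compare the voltage each scheme would send for the same three notes.
for note in (36, 48, 60):
    print(note, volts_per_octave(note), hertz_per_volt(note))
```

The loop prints `0.0 1.0`, `1.0 2.0`, and `2.0 4.0` beside each note number. A 1 V/octave synthesizer receiving the 4.0 V a Hz/V unit sends for note 60 would play two octaves higher than intended, and the error grows with every octave.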

But in the late 70s and early 80s, Dave Smith and Chet Wood, engineers and designers for the American synthesizer company Sequential Circuits, developed a universal synthesizer interface that eventually came to be known as MIDI. Because MIDI connections were designed to work in a digital format rather than with CV’s analog voltages, much more data in addition to note and gate could be transmitted, and at a faster rate than CV. A single MIDI connection could carry up to 16 channels of information that users could run simultaneously, freely assign, and even capture and store with computer programs. This meant a musician with a single controller had the ability to play and manipulate multiple parameters — from pitch and timing to filter frequency, vibrato, amplification, and more — on multiple interconnected machines all at once. It also meant that any synthesizer program or structured composition could be reduced to pure data, containing all the relevant note, sequence, and parameter information. In essence, any conceivable melody could be stored, reproduced, and shared simply as a compact file of MIDI values.
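Here is what that digital format looks like at the byte level, as a minimal Python sketch of three MIDI 1.0 channel messages. Each message starts with a status byte whose upper four bits give the message type (0x90 Note On, 0x80 Note Off, 0xB0 Control Change) and whose lower four bits give the channel, which is where MIDI’s 16 channels come from; the data bytes that follow run from 0 to 127. Controller 1 is the modulation wheel, often mapped to vibrato. The helper names are illustrative and not taken from any particular library.

```python
# Status-byte high nibbles for three MIDI 1.0 channel voice messages.
NOTE_OFF = 0x80
NOTE_ON = 0x90
CONTROL_CHANGE = 0xB0


def _message(status: int, channel: int, data1: int, data2: int) -> bytes:
    """Build a 3-byte channel message; channel is 0-15 (shown to users as 1-16)."""
    if not 0 <= channel <= 15:
        raise ValueError("channel must be 0-15")
    if not (0 <= data1 <= 127 and 0 <= data2 <= 127):
        raise ValueError("data bytes must be 0-127")
    return bytes([status | channel, data1, data2])


def note_on(channel: int, note: int, velocity: int) -> bytes:
    return _message(NOTE_ON, channel, note, velocity)


def note_off(channel: int, note: int, velocity: int = 0) -> bytes:
    return _message(NOTE_OFF, channel, note, velocity)


def control_change(channel: int, controller: int, value: int) -> bytes:
    return _message(CONTROL_CHANGE, channel, controller, value)


# Middle C on channel 1, the modulation wheel (controller 1) raised halfway
# on channel 2 (as a second synthesizer might receive it), then the note released.
msgs = [note_on(0, 60, 100), control_change(1, 1, 64), note_off(0, 60)]
print([m.hex() for m in msgs])  # ['903c64', 'b10140', '803c00']
```

The hex strings show a performance traveling as plain numbers: `90 3c 64` means Note On, channel 1, note 60, velocity 100.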

Perhaps even more essential to the development of the electronic music industry, Smith worked to get his new technology accepted as an industry-wide standard, thus allowing units from across the market to be usable together by any musician. He proposed establishing this standard at the 1981 meeting of the Audio Engineering Society, and over the next two years, representatives from American electronic instrument companies like Sequential Circuits, Oberheim Electronics, and Moog Music worked with their counterparts at Japanese firms such as Korg, Kawai, and Roland to make this communications format a convention on which they’d base future designs. This collaboration was historic in that it crossed both corporate and international lines to deliver a product measurably improved by standardization. Last year, Smith and Roland Corporation executive Ikutaro Kakehashi won a Technical Grammy Award for their contribution to the development of MIDI.

When the final format was announced and demonstrated for the public in 1983, it led to an explosion in sales and usage of synthesizers and other electronic musical equipment. For the first time, consumers could pick and choose their favorite gear from across various companies’ product lines, then link them together to quickly, easily, and reliably create rich and complicated compositions. Those compositions were stored on computers and shared either face-to-face or through electronic communication platforms that eventually evolved into the internet. The future of composition no longer rested on scribbling down dotted eighth notes in a graduate-level music theory class. Anyone with an interest in electronics could simply plug in and jam away. The future seemed limitless.

At around the same time, a new kind of sonic equipment was making a giant splash in hip-hop and began slowly moving into other forms of music. Samplers, machines capable of capturing snippets of audio and rearranging them into custom sequences, allowed electronic musicians to pull drum beats and musical phrases from other records to form a new whole. A user could, for instance, sample a Led Zeppelin drum solo and repeat certain fills and phrases for rhythmic effect. Or they could simply extract each individual recorded drum hit and come up with a totally original beat using John Bonham’s thundering kit. Then the artist could take string swells and guitar chord hits from other records and layer them together, creating an interesting backing track for further manipulation or for a rap verse.


The act of sampling audio was nothing new in the world of professional recording studios and music production, but the “sampler” turned this technique into the basis for a single, enclosed, consumer-based, self-sufficient instrument. With time, these products became more compact, affordable, and useful for programming with the advent of recording quantization, pitch-shifting, intelligent chopping, mutatable effects, and, eventually, computer integration. Products like E-mu’s SP-1200 and Akai’s MPC series helped hip-hop artists create a sonic language that would eventually create a genre from scratch and change the course of the music industry.

Several concerns immediately popped up. Firstly, legal complications arose. Sampling musicians tended to rip their material from copyrighted records, and those copyright infractions often translated into serious lawsuits for the artists and labels involved. One of the furthest-reaching, Campbell v. Acuff-Rose Music, Inc., flared up when the rap group 2 Live Crew sampled parts of Roy Orbison’s “Oh, Pretty Woman” without permission. The case eventually reached the Supreme Court, which held in 1994 that 2 Live Crew’s commercial parody could qualify as fair use. Subsequent use of recorded material in the music industry has often skirted the line between actual parody and simple reappropriation. For the most part, labels tended to attempt to “clear” samples — gain permission for use from the copyright holder, often in exchange for a fee or residual payment — rather than release a potentially offending (and lawsuit-inducing) product. For their part, many electronic artists have taken legality out of the equation by chopping and manipulating samples until they are nearly unrecognizable. Others, like Danger Mouse and Girl Talk, attack the legal question head-on by mashing readily recognizable audio pieces together to form a pastiche somewhere between an art project and a DJ set.

The debate over the legitimacy of sampling in popular music has raged for decades as new technology and new genres digest the practice. It’s become something of a favorite topic for legal scholars and cultural theorists alike. Arts and Sciences professor Dr. Andrew Goodwin wrote, in his 1990 academic paper, “Sample and hold: pop music in the digital age of reproduction,” that sample-based music represented an “orgy of pastiche,” “stasis of theft,” and even “crisis of authorship.” He went on to claim sample-based music “place[s] authenticity and creativity in crisis, not just because of the issue of theft, but through the increasingly automated nature of their mechanisms.” In short, Goodwin claimed that electronic samplers and sequencers did all the important work for a user, leaving the final product without the human spark of groove or true creativity.

In his 2001 New York Times article, “Strike the Band: Pop Music Without Musicians,” Tony Scherman attacked electronic music production as representing the evaporation of soul: “Digital music making represents an epochal rift in music-making styles, a final break with the once common-sense notion of music as something created, in real time, by a skilled practitioner, whose contribution presupposes a long, intimate and tactile relationship with an instrument.”

Later, he focused his ire specifically on the flourishing sampling genre, patronizingly lamenting, “If rock was conventionally modernist, its creators mining their souls in search of inspiration, then hip-hop and dance music, with their negation of traditional skills and rummage sale, frankly appropriative aesthetic, are pure postmodernism.”

Author and professor Dr. Tara Rodgers took direct aim at both Scherman and Goodwin in her 2003 academic paper “On the process and aesthetics of sampling in electronic music production.”

Referring to Scherman, she noted: “The author expresses nostalgia for a pre-digital era when skilled musicians played acoustic instruments in recording sessions, implying that digital instruments do not demand a comparable level of skill. And like Goodwin, Scherman confers authenticity on a pre-digital era when ‘technology’ supposedly did not intervene in musical process, despite the fact that musical instruments and music-making have always evolved in tandem with technological developments. To move beyond these incorrect, uninformed assumptions, it is productive to explore how digital music tools have their own accompanying sets of gestures and skills that musicians are continually exploring to maximize sonic creativity and efficiency in performance.”

Rodgers went on to explore how the unique tactile qualities and mechanical operations of various electronic samplers and synthesizers inform how musicians interact with them, inspiring specific manual techniques that are just as significant as the development of the proper way to hold a guitar neck or draw a violin bow. She used the example of internet-based discussion groups figuring out a gestural workaround to solve the Yamaha RM1X sequencer’s inability to simultaneously mute specific tracks and change song sections. Ultimately, Rodgers pointed out how the interconnectivity and workflow of digital samplers and sequencers inspired an artistic method and aesthetic that was completely unique, musical, and legitimate.

Perhaps the best ground-up defense of the artistic virtue of digital sampling was offered by Joseph Schloss in his book Making Beats: The Art of Sample-Based Hip-Hop. Addressing the concerns of commentators like Scherman and Goodwin, Schloss said: “[This line of reasoning] contains the hidden predicate that music is more valuable than forms of sonic expression that are not music. If one believes that only live instruments can create music and that music is good, then sample-based hip-hop is not good, by definition. […] Creating an analogous argument about painting: if you believe that musicians should make their own sounds, then hip-hop is not music, but by the same token, if you believe that artists should make their own paint, then painting is not art [emphasis mine]. The conclusion, in both cases, is based on a preexisting and arbitrary assumption.”

It’s worth noting that whole generations of electronic musicians have directly challenged the validity of this debate. Avant-garde godfathers like Terry Riley and Steve Reich made entire compositions from tape loops: not discreetly chopping and resequencing bits of audio to add to an existing piece or create a pleasant groove, but quickly repeating with a brutal staccato intensity and violently affecting the audio to create challenging soundscapes. Christian Marclay made experimental music by scratching and attacking a row of simultaneously engaged vinyl record players. His technical mannerisms while twisting these discs into mountains of cacophony went on to largely inspire the sample pairing and rhythmic scratches of turntablism in hip-hop. (By the way, in support of Rodgers’ defense of the unique gestures and skills of sample-based electronic music, I’d like to see Scherman try to deny the masterful, uniquely learned technique required for performances such as these.)

Significantly, these artists made no bones about using another artist’s sounds. They weren’t content to discreetly swipe a drum beat or keyboard chord progression: they wanted to manipulate the final mastered recordings themselves in new and interesting ways. Composer John Oswald coined the term “plunderphonics” in the mid-80s to describe his confrontational approach to intentional reappropriation: taking whole tracks, from pop music to television commercials and educational programs, and contorting them into wart-covered perversions of their former selves. His work inspired a whole sub-genre of nightmarish mashups of unknown thrift-store trash audio, overly familiar hit tracks, and (appropriately enough for this piece) MIDI reproductions of outside material. Artists inspired by the plunderphonics ethos intentionally brutalized outside audio as a form of artistic experimentation and protest against copyright legalities.

These days, the mostly impenetrable microgenre vaporwave seems to be carrying the tenets of the mostly analog-based plunderphonics artists of the 80s and 90s into the new, disposably digital age. Artists such as INTERNET CLUB, Vektroid (Laserdisc Visions, Macintosh Plus, 情報デスクVIRTUAL, etc.), and Computer Dreams have ripped emotionally void muzak from the deepest depths of the internet and proceeded to violate it with reverb, grain delay, resampling through horrific cassette equipment, and (again, apropos to this piece) the looping and BPM-shifting capabilities of software packages like Ableton Live.

Whether this amounts to new aesthetic statements of fidelity in the world of digitally hertz-based audio, dramatic statements on loneliness and disposability in the information age, or simply hipster buffoonery, it’s nice to see a new batch of confrontational weirdos twisting audio to malevolent ends.

Even setting the confrontational weirdos aside, commentators like Goodwin and Scherman wrung their hands when technology allowed artists to cut and paste bits of audio for compositional purposes. Think about the conniption fits they’ll go into once they’ve had the chance to digest Ableton Live 9’s audio-to-MIDI feature. Do you like a certain guitar solo? Not only can you now chop up and re-sequence the recording, but you can also extract the actual notes played and use them to drive a synthesizer or some other MIDI instrument. Like a particular beat? Ableton Live 9 has an audio-to-MIDI setting that recognizes the frequencies of various pieces of the drumset, sequences the MIDI data appropriately, and allows a user to swap in their own drum sounds. For a group seemingly obsessed with worrying about the sanctity of human music-making, this kind of technology has got to be artistically terrifying.

I recently spoke with Dennis DeSantis, Ableton’s Head of Documentation, about these concerns. He quickly tried to dispel these worries and insisted that Ableton’s real goal is to simply allow users to create music in a way they’ve never been able to before.

“The ethical questions around sampling predate Ableton,” DeSantis points out, “and any tools for manipulating digital media simply make easier what people were already doing in the analog domain anyway. Ableton makes tools for music production, with an emphasis on freedom, flexibility, and ease-of-use.”

I asked DeSantis if the company was worried about the unexplored legal ramifications of someone pulling note data from a copyrighted piece of material: extracting a MIDI melody from a Beatles song, for example. He replied, “Anything we could do that might restrict people from making ‘bad’ ethical decisions with third-party material would invariably detract from the usability of Live in other ways as well. We want to give more musicians more possibilities to do more things with musical material, and that’s pretty much all we think about.”

From what I’ve seen with the new audio-to-MIDI feature so far, I tend to agree that Ableton doesn’t have much to worry about. Early adopters who are already posting instructional videos on sites like YouTube have mostly kept the feature relegated to capturing and manipulating MIDI data from their own recordings. Derek VanScoten, an electronic music producer who uses Ableton extensively, confirmed that, at least for him, the feature has more to do with workflow, utility, and inspiration than with trying to reap the rewards of someone else’s famous melody.

“I’ve taken isolated Rhodes [electric piano] lines from a breakdown,” VanScoten recounted, “performed [audio-to-MIDI conversion], and then altered the key, changed it to an arpeggiator patch, and used it for a verse.” VanScoten also said his life as a commercial music artist was greatly improved by the feature: “I can take the client’s reference track, and then flip it just enough to make it work.”


When I asked whether he had any reservations about other artists being irresponsible with the technology, he said happily, “[I] was really skeptical of audio-to-MIDI completely ruining the game. Now I’m really open to any form of creative genius.”

For his part, DeSantis pointed out that the audio-to-MIDI feature was designed in an effort to inspire artists to create sounds, not enable them to steal sounds. “[A] big goal for Live 9 was to focus on different ways to help musicians in the early stages of the music-making process — when ideas are coming quickly, but are also not necessarily fully-formed and are difficult to pin down,” DeSantis said. “For a lot of Live users, existing music (either from records or from their own instrumental or vocal recordings) can serve as the catalyst for a new song, and we wanted to provide a more flexible way to work with this material.”

DeSantis did want to make it clear that, although Ableton Live 9 is approaching sampling music in a different way, they still have a great respect for the art form. “I think of [Ableton Live 9’s audio-to-MIDI feature] as the next generation of sampling,” said DeSantis. “Some musicians sing or play guitar or program drum machines, but others work with existing material as their ‘instrument.’ Until audio-to-MIDI, this meant that these musicians were faced with a real limitation — the notes were inextricably bound to the sound itself. Now, you can take the notes and repurpose them with any sound. Additionally, because MIDI is an inherently more flexible medium than audio, you can also do things like revoice chords, or extract just a kick drum pattern from a breakbeat, etc. These are all creative possibilities that aren’t easily possible within the audio domain.”
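Both of DeSantis’s examples become simple list operations once the notes are MIDI numbers. The Python sketch below revoices a chord with a drop-2 voicing (lowering the second-highest note of a close-position chord by an octave) and pulls the kick drum out of a converted beat. It assumes the kick lands on note 36, the General MIDI “Bass Drum 1” slot; whether a given converted clip uses that note is an assumption to check, and the beat data is hypothetical.

```python
KICK_NOTE = 36  # assumption: kick on note 36 (General MIDI "Bass Drum 1")


def drop2(chord: list[int]) -> list[int]:
    """Drop-2 revoicing: lower the second-highest note of a close-position chord an octave."""
    notes = sorted(chord)
    if len(notes) < 2:
        return notes
    notes[-2] -= 12
    return sorted(notes)


def kick_pattern(events: list[tuple[int, float]]) -> list[float]:
    """Keep only the onset times (in beats) of kick-drum notes."""
    return [start for note, start in events if note == KICK_NOTE]


cmaj7 = [60, 64, 67, 71]           # C E G B, close position
print(drop2(cmaj7))                # [55, 60, 64, 71], i.e. G C E B

# Hypothetical converted breakbeat: (note, start_beat) pairs using GM drum
# numbers (36 kick, 38 snare, 42 closed hi-hat).
beat = [(36, 0.0), (42, 0.5), (38, 1.0), (36, 1.5), (42, 1.5), (38, 3.0)]
print(kick_pattern(beat))          # [0.0, 1.5]
```

Doing the same in the audio domain would mean re-pitching or filtering a recording; here it is a change to a handful of integers.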

And it’s true: these things simply weren’t possible before, and the fine-tuned ability to extract notes from a chord or melody allows those with a basic knowledge of composition and theory to approach their computer-based music in a much deeper way. Folks have been using Ableton’s audio tools to deconstruct inspirational music and learn from its conventions for years. Now, the audio-to-MIDI feature allows people to quickly integrate this learning process into their existing workflow.

According to VanScoten, “One [other way to use the feature] is for transcription. Sometimes I’ll take a really thick piano chord from a jazz record. I can usually hear about 90% of it, but sometimes audio-to-MIDI may help me fill in the final gaps.”

Perhaps the genius of the audio-to-MIDI feature is this focus on technical precision. The MIDI format established an elegant way to isolate individual musical components and reduce them to mathematical values. By employing this method in automatic conversion, Ableton has managed to sidestep criticisms of pastiche altogether. You can make a hip-hop song with the breakdown from Pink Floyd’s “Money,” and squinting social commentators can question whether your fans really like your original piece or just the original tune of “Money.” But if you extract those melodies and chords in the form of MIDI data and manipulate them to your heart’s content, you’ve employed outside material only as a source of inspiration, never imitation. Plus, you’ll probably (hopefully) fly under the radar of Pink Floyd’s copyright attorneys.

I recently spoke with Dave Smith, one of the creators of MIDI, about these new uses of his technology. In the late 80s, Smith moved on from Sequential Circuits and contributed to the development of other electronic instruments. Notably, he helped develop the first software-based synthesizer for use with a PC. Eventually, Smith established his own hardware instrument company under the name Dave Smith Instruments. Synthesizers like the Mopho, Prophet ’08, and Prophet ’12 — not to mention drum machines like the Tempest — have revitalized the hardware-focused community in recent years. However, he still has a lot to say about this cutting-edge software technology.

First of all, he’s much less forgiving of conventional audio-sampling musicians. “I think there’s a clear difference between what we might call ‘audio sampling’ and ‘note sampling,’” said Smith. “The former has been an ongoing issue for years and is more clearly a ripoff, as the samples get longer and are more easily identified.”

He does see the technical potential in products like Ableton Live 9. Smith went on to say, “Automatically recovering the notes, though, is something that has been done manually for years by musicians. We all used to listen to records, often slowed down, to learn a guitar or keyboard riff. Having an automatic method to provide what is basically sheet music doesn’t seem to have the same level of stealing as audio sampling. At worst it’s a shortcut […]”

However, it was clear Smith still prefers the “human” feeling of a person playing an instrument: “From my experience, playing MIDI files of a song, no matter how accurate, pales compared to the real thing. I’ve never been too interested in that application of MIDI!”

DeSantis, for his part, made sure to mention how unconventional uses of the audio-to-MIDI feature are producing results that surprised the developers and prove the technology can be pushed beyond just playing a simple MIDI file. “One thing that we hear a lot, and that’s really exciting, is that people often get inspiring results from inaccurate conversions,” DeSantis said. “Some recordings (like full mixes, for example) are outside of what these tools are meant to do. But of course people are converting them anyway, and often end up finding amazing passages of new music that then become the start of a great song. The feature is generally really accurate when used with well-recorded, simple material. But it’s always nice to hear that people are also getting great results by using the feature ‘wrong.’”

Much like with Dr. Rodgers’ example of the Yamaha RM1X, Ableton Live 9 users are using their ingenuity and community discourse to develop techniques for the technology beyond the designers’ imaginations. The user base is already developing its own language and methodology around this feature, constantly bumping into interesting possibilities through continued exploration and experimentation. VanScoten happily quipped, “We all have our ‘happy mistakes.’”

As we pulled onto the interstate, my father-in-law asked me seriously, “So, what is it that you’re doing when you’re pressing buttons on that thing?”

My own father was generally horrified by most forms of electrified and electronic music, so he and I didn’t have much to talk about when it came to the music I consumed and created. When I was 15 or so, he came home early to find me pressing my electric guitar’s pickups against the face of my cranked-up amplifier to generate squalls of feedback, one of my first experiments with “noise music” before I had learned there was an official genre. He was predictably dumbfounded and enraged: “Why would you choose to listen to racket?” Hearing my high school rock band perform with our electric guitars and drums, he commented that the whole thing just sounded like a noisy mess to him. The few times he heard some of the synthesized electronic and dance music I had begun to explore, he shook his head in disbelief: “It’s fake. It sounds artificial.”

So on this recent late afternoon, when my father-in-law asked me an open-ended question as we drove to a family dinner, I stammered a bit before answering. He had recently attended a show I played at a local bar, performing electronic dance music produced with Ableton Live and controlled with an Akai APC40, a dedicated Ableton hardware controller. He was curious how the ways I pressed buttons, moved sliders, and twisted knobs affected the sounds he heard. Apart from EDM-initiated friends and bandmates, no one had ever asked me a detailed question about electronic music, and certainly never someone I considered an “elder.” The question had always been “Why are you doing that?” and never “How do you do that?” [Note: To be fair, unlike my father, my father-in-law never had to contend with coming through the door after a long day of work to the unrelenting squall of a maladjusted teenager intentionally and unaccountably creating feedback noise with a guitar amplifier.]

While my father-in-law isn’t necessarily a lover of electronic music, he does have an analytical mind and a deep appreciation of computers. He has worked closely with IT for decades, watching operating system after operating system succeed one another and appreciating the improvements each new method of computation brought. As I described the powers Ableton Live gave me (chopping audio, rearranging audio, extracting MIDI information, using that MIDI information to drive synthesizers, sampling those synth sounds, and so on), he nodded and occasionally asked clarifying questions about how the program accomplished all these tasks. At the end of our conversation, he said with happy wonder, “So you can pretty much make whatever sound you want, huh?” Whether or not electronic music is his favorite genre, he clearly appreciated the technical achievements that made such things possible.

Debates over the artistic validity of reappropriating samples will never die. For every artist who uses chopped audio to create compelling compositions, there’s an academic pundit who calls that process creatively bankrupt and a lawyer who insists it’s a statutory infraction. Using the cast of characters from my personal experience, I attribute this naysaying attitude to people like my father, who need to hear and see a wood-and-metal instrument played with a live human’s fingers or lungs to accept sound as legitimate music. I’d prefer that more people think like my father-in-law, who appreciates the wealth of opportunities afforded by advancements in technology and will give a listen to whatever resulting pieces strike his fancy, no matter how they were created.

In this ongoing cultural argument, Ableton Live 9 and other software programs that pioneered the relevant technology have found a “middle way.” Beyond simply giving users ways to divide and resequence audio files, this ability to reuse a piece of audio’s musical core, expressed as MIDI data, allows a musician to get all the inspirational benefits of traditional audio sampling with few of the legal and artistic concerns. By using MIDI data instead of the actual audio file, we can remove the tone and timbre from music and use the actual notes to drive our own sounds. We can strip away the skin of sound and use only the skeleton as inspiration. And, with the ability to modify the MIDI note data manually, we can even restructure this skeleton, creating a beast hardly resembling its ancestor. The MIDI extraction process gives us all the inspiration and none of the theft.
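To make the skin-and-skeleton idea concrete, here is a minimal Python sketch. It assumes the notes have already been extracted from a recording; it does not perform audio-to-MIDI conversion itself, and it is not how Ableton Live works internally. The `Note` type and the `render`, `square`, `transpose`, and `retrograde` helpers are hypothetical names chosen for illustration. The same note list is voiced with two different waveforms, then restructured by transposing it down a fourth and reversing it, the kind of manual editing described above. The pitch-to-frequency mapping follows the standard equal-tempered convention, with MIDI note 69 (A4) at 440 Hz.

```python
import math
from dataclasses import dataclass
from typing import Callable, List

@dataclass(frozen=True)
class Note:
    pitch: int       # MIDI note number, 0-127 (60 = middle C)
    velocity: int    # MIDI velocity, 1-127
    start: float     # onset, in beats
    length: float    # duration, in beats

def midi_to_hz(pitch: int) -> float:
    """Equal-tempered frequency, with note 69 (A4) tuned to 440 Hz."""
    return 440.0 * 2 ** ((pitch - 69) / 12)

def square(phase: float) -> float:
    """A harsher alternative 'skin': a square wave built from the sine's sign."""
    return 1.0 if math.sin(phase) >= 0 else -1.0

def render(notes: List[Note], voice: Callable[[float], float] = math.sin,
           bpm: float = 120.0, sample_rate: int = 8000) -> List[float]:
    """Mix the notes into one mono sample list, voicing each with `voice`.

    Only pitch, velocity, and timing come from the source notes; the timbre
    is whatever waveform function is passed in. No normalization is applied.
    """
    samples_per_beat = 60.0 / bpm * sample_rate
    end = max(n.start + n.length for n in notes)
    out = [0.0] * int(end * samples_per_beat)
    for n in notes:
        first = int(n.start * samples_per_beat)
        count = int(n.length * samples_per_beat)
        freq = midi_to_hz(n.pitch)
        amp = n.velocity / 127  # velocity scales loudness
        for i in range(count):
            if first + i < len(out):
                out[first + i] += amp * voice(2 * math.pi * freq * i / sample_rate)
    return out

def transpose(notes: List[Note], semitones: int) -> List[Note]:
    """Shift every pitch by a fixed number of semitones."""
    return [Note(n.pitch + semitones, n.velocity, n.start, n.length) for n in notes]

def retrograde(notes: List[Note]) -> List[Note]:
    """Reverse the phrase: mirror each note's span so the last note comes first."""
    end = max(n.start + n.length for n in notes)
    flipped = [Note(n.pitch, n.velocity, end - n.start - n.length, n.length) for n in notes]
    return sorted(flipped, key=lambda n: n.start)

# Hypothetical three-note riff standing in for notes extracted from a recording.
riff = [Note(60, 100, 0.0, 1.0), Note(64, 90, 1.0, 1.0), Note(67, 110, 2.0, 2.0)]

sine_take = render(riff)                    # same skeleton, sine-wave skin
square_take = render(riff, voice=square)    # same skeleton, square-wave skin
reworked = retrograde(transpose(riff, -5))  # down a fourth, then reversed
```

Here `[n.pitch for n in reworked]` is `[62, 59, 55]`: the rising C-E-G riff has become a descending D-B-G line that still derives from the source's notes but carries none of its recorded sound.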

Ableton is one company among many that have pursued audio-to-MIDI conversion as a technological advantage. However, Live 9 is unique in that it incorporates this technology into a purposefully fluid, improvisational, performance-oriented workflow. Perhaps more than any other platform currently on the market, it uses audio-to-MIDI conversion to achieve the kind of organic musical technique Dr. Rodgers wrote about in her defense of electronic music. As DeSantis and VanScoten point out, musicians are already experimenting and forming their own styles and modes with the conversion process, pushing it beyond the imagination of the original developers. In this way, the software is leading to a musical discipline that, in keeping with Rodgers’ arguments, is just as tactile, creative, and valid as physical instrument practices like fingerboard placement and proper drum stick grip.

I hope others follow Ableton into this territory and give musicians new, engaging ways to take advantage of the infinitely usable MIDI format. By doing so, these software developers will be doing more than just creating powerful computer programs. In giving users the ability to develop their own musical techniques, they will create new software-based instruments, capable of all the nuance and discipline of their wood-and-metal counterparts.

Special thanks to Dave Smith, Derek VanScoten, and Ableton representatives Cole Goughary and Dennis DeSantis for their assistance and cooperation.