Ableton is making history.

In the world of sound recording tools, the German music software company’s name, as well as the name of their flagship product, “Live,” may not be as ubiquitous as names like “Fender Stratocaster,” “Marshall amplifier,” or even “Pro Tools.” But in the world of computer-based music production, Ableton is a giant. Their fresh take on composition has earned their software a place in the arsenals of acts you perhaps wouldn’t expect, everyone from Flying Lotus and Daft Punk to Caribou and M83, Pete Townshend and Mogwai to DJ Rashad and D/P/I.

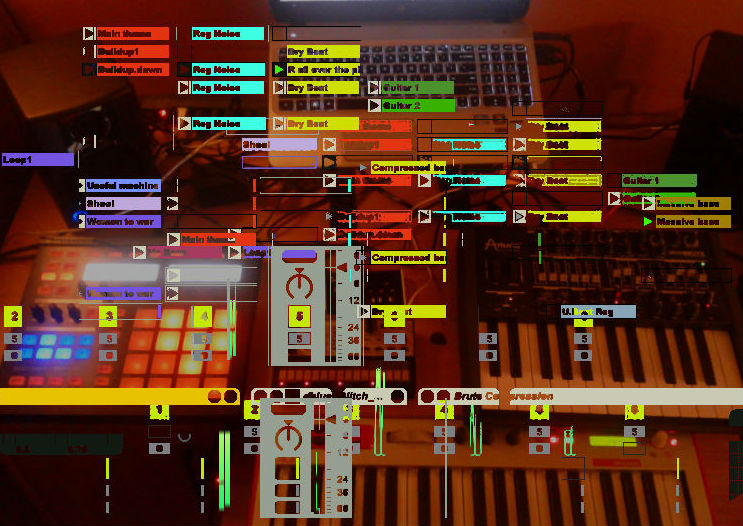

This far-reaching influence can be credited largely to Ableton Live’s groundbreaking interface. An extremely dense and powerful sampler at heart, Live subverts the linear, time-based recording process inherent to most Digital Audio Workstations (DAWs) with its Session View. This screen is a matrix of clip slots that allows users to place audio and MIDI loops in various patterns, then quickly and intuitively swap them in and out to create a live, organic performance. If a user prefers, they can lay material out on a traditional timeline recording view, but it’s the Session View that holds the true heart of Ableton Live and the creative spark that sets it apart from a sea of competing software. This creative setup allows artists to employ the software outside the well-trod corridors of electronic dance music. Perhaps more than users of any comparable program, Live users constantly discover ways to use the program’s functionality as a creative tool in tandem with their outboard instruments, hardware, and bandmates.

The latest version, Ableton Live 9, released earlier this year, adds a feature that has the potential to revolutionize the way electronic musicians create: audio-to-MIDI conversion. Simply put, “MIDI” (Musical Instrument Digital Interface) is a system by which a piece of music’s notes are expressed as numeric data. Take your favorite melody by Bach or Mozart: you can express the whole thing in terms of MIDI parameters like pitch, sequence, tempo, modulation, and velocity. Unlike normal musical notation, this MIDI information is numerically exact and can be interpreted directly by another MIDI instrument or piece of software.
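To make that concrete, here is a minimal Python sketch of a melody written out as MIDI-style numbers. The `Note` class, the `phrase` list, and the velocity values are my own illustrative choices, not anything Live produces; the only fixed facts are that MIDI pitch and velocity run from 0 to 127, that note 60 is middle C, and that note 69 is the A tuned to 440 Hz.

```python
from dataclasses import dataclass


@dataclass
class Note:
    pitch: int       # MIDI note number, 0-127 (60 = middle C)
    velocity: int    # how hard the note is struck, 0-127
    start: float     # position in beats from the start of the phrase
    duration: float  # length in beats


def pitch_to_hz(pitch: int) -> float:
    """Equal-tempered frequency, with MIDI note 69 (A) tuned to 440 Hz."""
    return 440.0 * 2 ** ((pitch - 69) / 12)


def beats_to_seconds(beats: float, bpm: float) -> float:
    """Tempo turns musical time (beats) into clock time (seconds)."""
    return beats * 60.0 / bpm


# C C G G A A G: the opening of the tune Mozart wrote variations on.
# The velocity of 90 is an arbitrary, hypothetical value.
phrase = [
    Note(60, 90, 0, 1), Note(60, 90, 1, 1),
    Note(67, 90, 2, 1), Note(67, 90, 3, 1),
    Note(69, 90, 4, 1), Note(69, 90, 5, 1),
    Note(67, 90, 6, 2),
]

for n in phrase:
    print(n.pitch, round(pitch_to_hz(n.pitch), 2), beats_to_seconds(n.start, 120))
```

Nothing in that list is sound. It is only instructions, which is why any instrument that understands the numbers can play it back.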

Traditionally, the medium of MIDI in a computer environment worked one way: MIDI input to audio signal. A keyboard transmitting MIDI information could trigger a software-based synthesizer. MIDI sequences programmed by a musician could command computerized orchestras or drive external equipment. But with Live 9’s audio-to-MIDI feature, a user has the ability to extract this valuable note information from existing audio content and use it however they wish. Other software programs have attempted this feature in the past with varying results and, oftentimes, serious asking prices and/or a protracted workflow. Ableton is the first to incorporate the extraction process into such a creatively intuitive setup, not to mention fine-tuning the MIDI recognition technology until it was as close to perfect as we’ve seen a piece of software come yet.

All this revolution got me thinking about how important and useful MIDI technology really is, what a miracle it is that the music industry accepted it as a standard at all, and how striking it is that a 30-year-old communications protocol is still driving the ways bleeding-edge technological revolutions take shape. What is it about MIDI that’s so compelling to hardware and software developers?

More than anything, I began to wonder about the potential uses (and possible abuses) of Ableton Live’s audio-to-MIDI conversion feature. If anyone can extract note data from any outside source and reuse it to their own desire, will anyone have to actually play anymore? Are we witnessing the beginning of the end of real-world musicianship? For that matter, are we witnessing the end of musical originality?

“Are you ever going to use any of this stuff?”

I’ll admit it, I’m a bit of a pack rat. I’ll hang on to old shoe boxes, broken tools, threadbare clothes… it just always seems a shame to throw away something that could potentially be of further use some day. My wife is the opposite: a tidy German woman who loves to have clean, orderly surroundings. Although my penchant for messiness annoys her, she leaves my haphazardly stacked electronic gear and tangles of wires alone, as long as I relegate them to our basement, which we’ve unofficially designated my “studio.” I know it pains her to just know there’s such a ridiculous mess somewhere in the house, but she knows my space is important to me, and I appreciate her understanding. This arrangement has probably helped our marriage quite a bit: I can make as big a mess as I want so long as I keep it out of her view.

From time to time, though, my pack-rat tendencies will get too intense and she’ll set off on a junk-purging binge. It’s usually triggered by something simple: opening up a cabinet door to have clumps of wires fall down on her, looking underneath a shelf to find a graveyard of dust-collecting guitar effects pedals… I believe my mistake this day was hiding the AA batteries she was looking for in a drawer buried beneath a mound of obsolete cables and connectors. There were old PS/2 connector computer mice, serial port monitor cables, 3.5mm data transfer wires for old Texas Instruments calculators, power adapters for lost and broken equipment, etc.

So when she stepped back from the pile with her hands on her hips, glaring at me, I felt a little sheepish and conciliatory.

“Really, if you’re never going to use this stuff ever again, you need to just throw it away!”

It’s true. There are a lot of cables and connectors I no longer need. I’ve been interested in electronic technology since I was young, especially music and computer technology, so I have amassed spiderwebs of wires and connectors, many currently sitting in varying states of uselessness.

From the very start of any hardware design, it seems one of the first priorities is figuring out how to make the device in question communicate with other devices. This is especially true with pieces of musical gear, which, to be useful in composition and in performance, require the ability to perform and respond immediately to signals from other pieces of musical gear. I’ve owned samplers, sequencers, synthesizers, drum machines, effects processors, and other units that have used everything from RCA to S/PDIF, 3.5 mm sync, SCSI, CV, 1/4” jacks, and XLR. Some of these are still in use in various parts of my studio, but most are collecting dust in the depths of my drawers, shelves, and filing cabinets. It seems a lot of these connection formats just fall out of favor for one reason or another and never show up again on new models.

These days, most computer gear is standardized to work off some version of USB, which can involve various interface sizes and versions depending on the technology and scale involved. However, in the realm of music gear, one format has existed for much longer and has established perhaps an even more consistent authority on the issue of unit-to-unit connection: MIDI. Almost every piece of electronic music gear I own has a MIDI port, and the technology hasn’t changed much in the last 30 years. This system of communication is so pervasive and reliable that it’s been widely accepted since before I was born, and no MIDI cables have ever made their way to my wire graveyards.

With the proliferation of electronic synthesizers in the 1960s and 70s, musicians suddenly had the ability to completely control the pitch, timbre, sequence, and tempo of any electronically synthesized sound. In fact, pieces traditionally requiring a drummer, a bassist, one or more guitarists, and any number of additional keyboardists could all be efficiently replicated through the use of careful programming and sequencing of synthesizers and drum machines. The real trick became figuring out how to get these units to speak with each other.

An electronic musician may have one synthesizer serving as a treble melodic lead, another as a foundational bass, and a third as a synthesized drum rack. In the early days, if this musician wanted all three to play together for a complete composition, it was easier said than done. Without three musicians to play each of these pieces of equipment collaboratively, it was a struggle to get them all to play the correct notes at the correct speed and consistently stay in time. One could set the BPM (Beats Per Minute) to the exact same figure on all three pieces of equipment and attempt to trigger them in unison, but minor imperfections in timing and sequencing would still result in the melodies drifting out of sync and key.
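The arithmetic behind that drift is simple enough to sketch. In the Python below, the function name and the example figures (one machine at 120 BPM, another whose clock actually runs at 120.1) are hypothetical, chosen only to show how a tiny tempo mismatch accumulates.

```python
def drift_ms(nominal_bpm: float, actual_bpm: float, minutes: float) -> float:
    """Milliseconds by which a machine running at actual_bpm leads one
    running at nominal_bpm, measured at the beat the nominal machine
    reaches after `minutes` of playback. Negative means it lags."""
    beats = nominal_bpm * minutes              # beats the reference machine has played
    reference_time = minutes * 60.0            # seconds the reference machine took
    other_time = beats * 60.0 / actual_bpm     # seconds the other machine took
    return (reference_time - other_time) * 1000.0


# Hypothetical case: a three-minute song, one clock less than 0.1% fast.
print(drift_ms(120.0, 120.1, 3.0))
```

With those assumed numbers the two machines end up roughly 150 milliseconds apart by the end of the song, which is easily enough to hear as a flam between the drums and the bass.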

One of the first solutions to this problem was the Control Voltage/Gate system (referred to simply as “CV”). Through a system of electronic pulses and voltage changes, cords connected between the various instruments would dictate note pitch and timing information. This worked on vintage synthesizers to keep them relatively in time and in key, but there were limitations. First, the CV system was sometimes complicated to implement and relied on precisely tuned equipment, which was often not standardized from manufacturer to manufacturer. Musicians who owned a synthesizer made by Korg and a drum machine made by Roland might not have been able to sync them up at all because of the different proprietary designs of the units. Second, even when the system worked perfectly, the electrical impulses could only control the pitch of a note and the points at which it started and stopped, and nothing else. The abilities and possibilities of the format were very limited.
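One concrete form of that incompatibility, as I understand it, is that manufacturers disagreed on how voltage should map to pitch: Roland gear generally followed a volts-per-octave scheme, while Korg generally used hertz-per-volt. The Python sketch below compares the two. The function names and the reference point (MIDI-style note 36 at 1 volt) are assumptions for illustration, not the calibration of any particular instrument.

```python
def volts_per_octave(pitch: int, ref_pitch: int = 36, ref_volts: float = 1.0) -> float:
    """Linear scheme: each octave (12 semitones) adds one volt."""
    return ref_volts + (pitch - ref_pitch) / 12


def hertz_per_volt(pitch: int, ref_pitch: int = 36, ref_volts: float = 1.0) -> float:
    """Proportional scheme: voltage tracks frequency, so each octave doubles it."""
    return ref_volts * 2 ** ((pitch - ref_pitch) / 12)


# The two schemes agree at the reference note and one octave up,
# then diverge: two octaves up is 3 volts in one and 4 volts in the other.
for pitch in (36, 48, 60):
    print(pitch, volts_per_octave(pitch), hertz_per_volt(pitch))
```

Patch a keyboard speaking one scheme into a synthesizer expecting the other and the notes come out at the wrong intervals, even though every cable is plugged in correctly.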

But in the late 70s and early 80s, Dave Smith and Chet Wood, engineers and designers for the American synthesizer company Sequential Circuits, developed a universal synthesizer interface that eventually came to be known as MIDI. Because MIDI connections were designed to work in a digital format, rather than with CV’s electrical pulses, much more data in addition to note and gate could be transmitted, and at a faster rate than CV. MIDI transmissions consisted of multiple channels of information that users could run simultaneously, freely assign, and even capture and store with computer programs. This meant a musician with a single controller had the ability to play and manipulate multiple parameters (from pitch and timing to filter frequency, vibrato, amplification, and more) on multiple interconnected machines all at once. It also meant that any synthesizer program or structured composition could be reduced to pure data, containing all the relevant note, sequence, and parameter information. In essence, any conceivable melody could be stored, reproduced, and shared simply as a compact file of MIDI values.
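For a sense of how compact that data is, here is a small Python sketch that builds a few MIDI channel messages by hand. The helper names are my own; the byte layout (a status byte carrying the message type and one of 16 channels, followed by two data bytes in the range 0 to 127) follows the MIDI 1.0 specification.

```python
def _message(status: int, channel: int, data1: int, data2: int) -> bytes:
    """Build a 3-byte channel message. Channels are 0-15 on the wire
    (shown to users as 1-16); data bytes must fit in 7 bits."""
    if not 0 <= channel <= 15:
        raise ValueError("channel must be 0-15")
    if not (0 <= data1 <= 127 and 0 <= data2 <= 127):
        raise ValueError("data bytes must be 0-127")
    return bytes([status | channel, data1, data2])


def note_on(channel: int, pitch: int, velocity: int) -> bytes:
    return _message(0x90, channel, pitch, velocity)


def note_off(channel: int, pitch: int) -> bytes:
    return _message(0x80, channel, pitch, 0)


def control_change(channel: int, controller: int, value: int) -> bytes:
    return _message(0xB0, channel, controller, value)


# One cable, two machines: a lead line on channel 0 and a bass on channel 1,
# plus a mod-wheel move (controller 1) on the lead.
stream = (
    note_on(0, 72, 100)
    + note_on(1, 36, 110)
    + control_change(0, 1, 64)
    + note_off(0, 72)
    + note_off(1, 36)
)
print(stream.hex(" "))
```

Five events come to fifteen bytes, and each receiving instrument simply ignores the messages addressed to channels other than its own.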